Introduction

The goal of the competition is to find effective sim2real methods, that is, methods for transferring an agent trained in simulation to a physical robot. In the competition task, the robot must find 5 goals whose positions are randomly generated in the field, and then attack the defensive robot by shooting bullets. The competition is divided into two stages. In the first stage, the agent only needs to complete these tasks in the simulation environment. In the second stage, we deploy the participating agents to the physical robot and test them in a real environment. At this stage, the agent must cope with the differences between the simulated and the physical actuators, as well as the state differences caused by the environment. The model can be adjusted according to the feedback data and results.

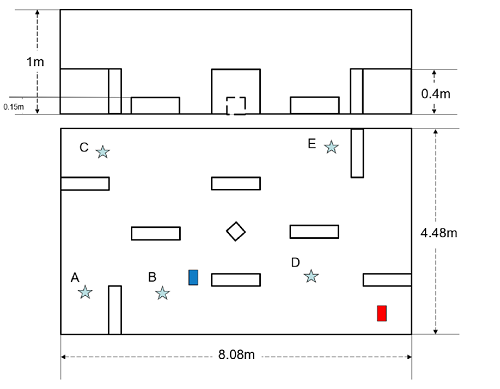

For example, in the figure above, the blue rectangle represents the robot, which starts at a random free location in the field. Its task is to find the 5 goals (represented by five stars of different colors in the figure) and activate them in order from 1 to 5. The red rectangle represents the defensive robot, which is initially dormant. After all 5 goals have been correctly activated, the defensive robot wakes up, searches for the robot, and shoots at it. The white rectangles and diamonds in the field are obstacles. The robot should keep collisions to a minimum, since collision time is penalized in the score.

Evaluation Metric

Score = 60×N + A×0.5×(D+H) - T - 10×K

N is the number of goals successfully activated, A indicates whether the defensive robot has been activated (1 if so, 0 otherwise), D is the damage dealt to the defensive robot, H is the remaining HP, T is the time taken (in seconds), K = 2 × Tk, and Tk is the continuous collision time (in seconds).
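As a rough illustration, the formula can be written as a small Python function. This is a minimal sketch, not the organizers' scoring code: the function and parameter names are hypothetical, the trial values are made up for illustration, and it assumes A is 1 when the defensive robot has been activated and 0 otherwise.

```python
def competition_score(n_goals, defender_activated, damage, remaining_hp,
                      time_s, collision_time_s):
    """Score = 60*N + A*0.5*(D+H) - T - 10*K, with K = 2*Tk."""
    # Assumption: A acts as a 0/1 indicator for the defensive robot.
    a = 1 if defender_activated else 0
    # K is twice the continuous collision time.
    k = 2 * collision_time_s
    return 60 * n_goals + a * 0.5 * (damage + remaining_hp) - time_s - 10 * k


# Hypothetical trial: all 5 goals activated, defender activated,
# D = 640, H = 150, T = 28.4 s, Tk = 0.1 s.
example_trial = competition_score(5, True, 640.0, 150.0, 28.4, 0.1)

# Hypothetical robot that never moves during a 180 s trial.
static_robot = competition_score(0, False, 0.0, 800.0, 180.0, 0.0)

print(round(example_trial, 1))  # 664.6
print(static_robot)             # -180.0
```

Because the collision term works out to 20 points per second of collision, a few seconds of contact with an obstacle can outweigh the 60 points gained from activating a goal.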

Leaderboard

To evaluate the submitted models, we defined a scene generation method that produces test scenes at 3 difficulty levels. For each level, 10 trials were conducted in which the goals and the robots were placed randomly in the field according to the distribution specified by the predesigned scene generation method.

Track1: the complete information-based track

Simulation Phase

We recorded the mean number of activated goals (N) and the mean score of each model over 30 trials across the 3 scene levels. The results are shown in the following table. Submitted time is the time of the latest submission we received from each team.

Note that to enter the Sim2Real stage, a team's mean number of activated goals (N) must be greater than 3.
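Because every level contributes the same number of trials, the overall mean over all trials equals the average of the three per-level means. The aggregation and the entry check can be sketched as follows. The function names are hypothetical, and the sample values are D504's per-level mean scores taken from the Easy, Moderate, and Hard tables of this phase.

```python
from statistics import mean


def overall_mean(level_means):
    """Average the per-level means.

    This equals the mean over all trials only when every level
    has the same number of trials (10 per level here).
    """
    return mean(level_means)


def enters_sim2real(mean_goals, threshold=3.0):
    """Entry check: mean activated goals must be strictly greater than 3."""
    return mean_goals > threshold


# D504's mean scores per level, copied from the tables in this section.
d504_levels = {"easy": 635.7, "moderate": 589.6, "hard": 606.2}
d504_overall = overall_mean(d504_levels.values())

print(round(d504_overall, 1))  # 610.5
print(enters_sim2real(5.0))    # True
print(enters_sim2real(3.0))    # False
```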

♠ Rank

| Participant team | Submitted time | Mean activated goals(N) | Mean score |

| Asterism | 2022/6/18 6:52:45 | 5.0 | 659.2 |

| D504 | 2022/6/14 19:03:48 | 5.0 | 610.5 |

| HKU_Herkules2 | 2022/6/16 0:51:05 | 5.0 | 572.8 |

| SEU-Abang | 2022/6/18 1:49:50 | 4.8 | 78.2 |

| SEU-AutoMan | 2022/6/17 23:22:02 | 3.8 | 36.9 |

| KnownAsSuperEast | 2022/6/8 10:41:05 | 3.8 | -8.0 |

| THU_RLC_A | 2022/6/17 13:12:40 | 4.3 | -98.5 |

| QGRFH | 2022/6/9 15:14:49 | 4.7 | -344.8 |

| You are my god | 2022/6/10 17:16:08 | 4.7 | -685.1 |

| HKU_Herkules1 | 2022/6/15 16:06:42 | 3.6 | -3084.7 |

| stay healthy for ddl | 2022/6/8 0:11:51 | 1.8 | -1284.9 |

| King of Dog Point | 2022/6/18 0:26:50 | 2.8 | -3382.4 |

| LuoXiangSaysAI | 2022/6/8 10:08:00 | 2.6 | -4354.3 |

The detailed performance of the submitted models at each scene level is shown in the following tables. Navigation time is the mean time taken to activate the goals.

♣ Easy

| Participant team | Navigation time | Mean activated goals(N) | Mean remaining HP(H) | Mean damage(D) | Mean collision time(Tk) | Mean time(T) | Mean score |

| Asterism | 8.7 | 5.0 | 150.0 | 640.0 | 0.1 | 28.4 | 663.9 |

| D504 | 10.0 | 5.0 | 50.0 | 720.0 | 1.4 | 20.3 | 635.7 |

| HKU_Herkules2 | 9.5 | 5.0 | 80.0 | 620.0 | 6.6 | 27.9 | 489.8 |

| QGRFH | 24.1 | 5.0 | 0.0 | 200.0 | 11.8 | 37.3 | 125.7 |

| SEU-Abang | 31.8 | 5.0 | 0.0 | 40.0 | 9.0 | 43.8 | 94.8 |

| SEU-AutoMan | 91.0 | 3.9 | 320.0 | 100.0 | 4.8 | 99.4 | 88.3 |

| KnownAsSuperEast | 69.0 | 4.0 | 160.0 | 180.0 | 15.7 | 101.5 | -86.3 |

| THU_RLC_A | 49.7 | 4.2 | 270.0 | 400.0 | 28.7 | 71.9 | -139.2 |

| You are my god | 39.1 | 5.0 | 0.0 | 30.0 | 23.1 | 75.5 | -222.9 |

| HKU_Herkules1 | 51.1 | 4.6 | 80.0 | 160.0 | 67.3 | 86.4 | -1076.7 |

| stay healthy for ddl | 180.0 | 1.7 | 800.0 | 0.0 | 59.5 | 180.0 | -1268.2 |

| King of Dog Point | 97.8 | 3.8 | 720.0 | 0.0 | 132.1 | 165.6 | -2420.8 |

| LuoXiangSaysAI | 98.0 | 3.5 | 530.0 | 0.0 | 190.3 | 144.4 | -3675.7 |

♥ Moderate

| Participant team | Navigation time | Mean activated goals(N) | Mean remaining HP(H) | Mean damage(D) | Mean collision time(Tk) | Mean time(T) | Mean score |

| Asterism | 13.5 | 5.0 | 70.0 | 610.0 | 0.5 | 33.7 | 595.7 |

| HKU_Herkules2 | 10.5 | 5.0 | 100.0 | 640.0 | 2.4 | 26.1 | 594.0 |

| D504 | 10.7 | 5.0 | 20.0 | 650.0 | 1.2 | 20.1 | 589.6 |

| SEU-Abang | 34.1 | 4.8 | 80.0 | 60.0 | 7.1 | 47.0 | 126.9 |

| SEU-AutoMan | 62.2 | 4.4 | 290.0 | 220.0 | 10.0 | 74.4 | 123.9 |

| KnownAsSuperEast | 83.1 | 4.0 | 280.0 | 180.0 | 11.6 | 107.8 | 8.8 |

| You are my god | 48.5 | 5.0 | 0.0 | 10.0 | 40.0 | 92.5 | -588.6 |

| THU_RLC_A | 64.9 | 4.0 | 360.0 | 410.0 | 53.5 | 79.9 | -646.7 |

| QGRFH | 62.6 | 4.3 | 190.0 | 240.0 | 67.2 | 73.4 | -1025.7 |

| stay healthy for ddl | 180.0 | 2.3 | 800.0 | 0.0 | 58.6 | 180.0 | -1214.4 |

| HKU_Herkules1 | 108.8 | 3.7 | 400.0 | 70.0 | 144.1 | 123.4 | -2710.1 |

| King of Dog Point | 97.2 | 3.6 | 620.0 | 0.0 | 145.3 | 164.8 | -2746.8 |

| LuoXiangSaysAI | 131.3 | 3.2 | 640.0 | 0.0 | 191.3 | 156.4 | -3752.3 |

♦ Hard

| Participant team | Navigation time | Mean activated goals(N) | Mean remaining HP(H) | Mean damage(D) | Mean collision time(Tk) | Mean time(T) | Mean score |

| Asterism | 12.1 | 5.0 | 180.0 | 740.0 | 0.2 | 37.4 | 718.1 |

| HKU_Herkules2 | 14.3 | 5.0 | 120.0 | 660.0 | 1.2 | 31.2 | 634.7 |

| D504 | 11.5 | 5.0 | 20.0 | 670.0 | 0.9 | 20.7 | 606.2 |

| THU_RLC_A | 37.5 | 4.8 | 180.0 | 490.0 | 1.7 | 58.3 | 490.2 |

| KnownAsSuperEast | 92.6 | 3.4 | 400.0 | 150.0 | 6.9 | 126.7 | 53.4 |

| SEU-Abang | 83.1 | 4.6 | 240.0 | 30.0 | 9.2 | 92.5 | 12.8 |

| SEU-AutoMan | 130.9 | 3.3 | 610.0 | 0.0 | 9.9 | 165.8 | -101.5 |

| QGRFH | 46.2 | 5.0 | 0.0 | 180.0 | 23.2 | 59.3 | -134.5 |

| You are my god | 97.9 | 4.3 | 270.0 | 10.0 | 69.9 | 122.5 | -1243.7 |

| stay healthy for ddl | 180.0 | 1.5 | 800.0 | 0.0 | 64.1 | 180.0 | -1372.0 |

| King of Dog Point | 180.0 | 1.3 | 800.0 | 0.0 | 243.8 | 180.0 | -4979.5 |

| HKU_Herkules1 | 135.8 | 2.5 | 570.0 | 90.0 | 275.6 | 153.7 | -5467.4 |

| LuoXiangSaysAI | 180.0 | 1.3 | 800.0 | 0.0 | 276.6 | 180.0 | -5634.8 |

Sim2Real Phase

In the Sim2Real phase, we evaluated the submitted models in both the simulator and the physical environment. We recorded the mean number of activated goals (N) and the mean score of each model over 6 trials across the 3 scene levels. The results are shown in the following table. Submitted time is the time of the latest submission we received from each team.

Note that to qualify for the physical test, a team's mean number of activated goals (N) in the easy scenes must be greater than 3.

The final score is a weighted combination of the simulation score and the real-world score:

Final score = 0.2 × sim score + 0.8 × real score
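For illustration, the weighting can be sketched in a few lines of Python. The function name and default arguments are hypothetical; the input values are D504's mean sim and real scores from the rank table of this phase.

```python
def final_score(sim_score, real_score, sim_weight=0.2, real_weight=0.8):
    """Final score = 0.2 * sim score + 0.8 * real score."""
    return sim_weight * sim_score + real_weight * real_score


# D504: mean sim score 242.8, mean real score 141.9.
d504_final = final_score(242.8, 141.9)
print(round(d504_final, 1))  # 162.1
```

Since the published scores are rounded to one decimal place, recomputing other rows from the table may differ from the listed final score in the last digit.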

♠ Rank

| Participant team | Submitted time | Mean activated goals(N), sim | Mean activated goals(N), real | Mean score, sim | Mean score, real | Final score |

| D504 | 2022/7/31 16:40:00 | 4.3 | 5.0 | 242.8 | 141.9 | 162.1 |

| HKU_Herkules2 | 2022/7/31 15:17:00 | 5.0 | 3.3 | 366.9 | -3.7 | 70.3 |

| Asterism | 2022/7/30 16:58:23 | 3.0 | 5.0 | 167.5 | 11.4 | 42.6 |

| SEU-AutoMan | 2022/8/1 1:12:00 | 4.0 | 5.0 | 192.8 | 0.7 | 39.1 |

| SEU-Abang | 2022/8/1 2:27:00 | 5.0 | 5.0 | 57.4 | 8.1 | 17.9 |

| You are my god | 2022/7/30 12:02:01 | 4.6 | 5.0 | 158.8 | -66.8 | -21.7 |

| THU_RLC_A | 2022/6/14 17:06:00 | 1.6* | 0.0 | -75.2 | -180.0 | -159.0 |

*The number of activated goals did not meet the requirement for the physical test, so the real score was calculated as for a static robot.

The detailed performance of the submitted models at each scene level is shown in the following tables. Navigation time is the mean time taken to activate the goals.

♣ Easy

| Participant team | Navigation time, sim | Navigation time, real | Mean activated goals(N), sim | Mean activated goals(N), real | Mean remaining HP(H), sim | Mean remaining HP(H), real | Mean damage(D), sim | Mean damage(D), real | Mean collision time(Tk), sim | Mean collision time(Tk), real | Mean time(T), sim | Mean time(T), real | Mean score, sim | Mean score, real | Final score |

| SEU-AutoMan | 38.7 | 80.8 | 5.0 | 5.0 | 0.0 | 50.0 | 400 | 500 | 0.1 | 11.3 | 49.4 | 155.5 | 447.5 | 192.2 | 243.2 |

| HKU_Herkules2 | 28.6 | 135.4 | 5.0 | 4.5 | 150 | 550 | 400 | 400 | 4.3 | 11.2 | 49.3 | 141.1 | 439.0 | 178.4 | 230.5 |

| You are my god | 34.8 | 33.1 | 5.0 | 5.0 | 0.0 | 450 | 150 | 100 | 8.7 | 11.5 | 42.9 | 180.0 | 156.8 | 164.5 | 163.0 |

| SEU-Abang | 19.7 | 37.4 | 5.0 | 5.0 | 0.0 | 0.0 | 300 | 0.0 | 5.3 | 4.5 | 28.3 | 180.0 | 315.0 | 28.8 | 86.0 |

| Asterism | 16.6 | 63.1 | 5.0 | 5.0 | 0.0 | 0.0 | 350 | 50.0 | 0.6 | 15.2 | 25.2 | 83.1 | 436.7 | -62.9 | 36.9 |

| D504 | 32.2 | 57.1 | 5.0 | 5.0 | 0.0 | 0.0 | 200 | 150 | 2.4 | 18.4 | 43.4 | 74.9 | 309.4 | -68.3 | 7.2 |

| THU_RLC_A | 110.7 | 180.0 | 2.5 | 0.0 | 400 | 800 | 150 | 0.0 | 5.8 | 0.0 | 114.8 | 180.0 | -5.8 | -180 | -145.2 |

♥ Moderate

| Participant team | Navigation time, sim | Navigation time, real | Mean activated goals(N), sim | Mean activated goals(N), real | Mean remaining HP(H), sim | Mean remaining HP(H), real | Mean damage(D), sim | Mean damage(D), real | Mean collision time(Tk), sim | Mean collision time(Tk), real | Mean time(T), sim | Mean time(T), real | Mean score, sim | Mean score, real | Final score |

| D504 | 68.7 | 56.1 | 5.0 | 5.0 | 0.0 | 300 | 350 | 450 | 0.3 | 17.5 | 95.7 | 123.5 | 373.3 | 202.0 | 236.3 |

| HKU_Herkules2 | 26.4 | 178.3 | 5.0 | 3.5 | 0.0 | 800 | 300 | 0.0 | 3.2 | 5.0 | 42.2 | 180.0 | 343.8 | -68.9 | 13.6 |

| SEU-AutoMan | 62.3 | 71.2 | 5.0 | 5.0 | 0.0 | 150 | 0.0 | 750 | 0.7 | 34.3 | 79.1 | 149.5 | 207.5 | -85.9 | -27.2 |

| SEU-Abang | 93.9 | 33.5 | 5.0 | 5.0 | 0.0 | 0.0 | 50.0 | 0.0 | 24.4 | 5.3 | 113.8 | 180.0 | -277.2 | 15.1 | -43.4 |

| You are my god | 42.1 | 42.0 | 5.0 | 5.0 | 0.0 | 100 | 0.0 | 100 | 2.4 | 19.5 | 53.8 | 124.2 | 198.5 | -114.8 | -52.2 |

| Asterism | 180.0 | 71.9 | 1.5 | 5.0 | 800 | 0.0 | 0.0 | 300 | 0.3 | 24.3 | 180.0 | 98.0 | -96.2 | -133.3 | -125.9 |

| THU_RLC_A | 103.9 | 180.0 | 2.5 | 0.0 | 400 | 800 | 150 | 0.0 | 7.1 | 0.0 | 116.1 | 180.0 | -33.5 | -180 | -150.7 |

♦ Hard

| Participant team | Navigation time, sim | Navigation time, real | Mean activated goals(N), sim | Mean activated goals(N), real | Mean remaining HP(H), sim | Mean remaining HP(H), real | Mean damage(D), sim | Mean damage(D), real | Mean collision time(Tk), sim | Mean collision time(Tk), real | Mean time(T), sim | Mean time(T), real | Mean score, sim | Mean score, real | Final score |

| D504 | 114.9 | 61.7 | 3.0 | 5.0 | 450 | 300 | 50.0 | 550 | 0.2 | 15.9 | 180.0 | 115.7 | 45.8 | 292.3 | 242.9 |

| Asterism | 108.7 | 99.6 | 2.5 | 5.0 | 800 | 50.0 | 0.0 | 400 | 0.4 | 9.0 | 180.0 | 114.3 | 162.2 | 230.6 | 216.9 |

| SEU-Abang | 33.9 | 42.0 | 5.0 | 5.0 | 0.0 | 0.0 | 50.0 | 0.0 | 7.2 | 6.9 | 47.2 | 180.0 | 134.4 | -19.6 | 11.2 |

| HKU_Herkules2 | 41.4 | 178.4 | 5.0 | 2.0 | 0.0 | 800 | 350 | 0.0 | 5.1 | 3.6 | 55.5 | 180.0 | 317.9 | -121.1 | -33.3 |

| SEU-AutoMan | 180.0 | 105.9 | 2.0 | 5.0 | 800 | 350 | 0.0 | 600 | 0.8 | 36.2 | 180.0 | 155.9 | -76.6 | -104.3 | -98.7 |

| You are my god | 110.5 | 54.2 | 4.0 | 5.0 | 400 | 150 | 100 | 100 | 2.7 | 26.3 | 115.6 | 149.7 | 121.0 | -250.4 | -176.1 |

| THU_RLC_A | 180.0 | 180.0 | 0.0 | 0.0 | 800 | 800 | 0.0 | 0.0 | 0.3 | 0.0 | 180.0 | 180.0 | -186.6 | -180 | -181.3 |

Track2: image-based track

♠ Rank(Simulation Phase)

| Participant team | Submitted time | Mean activated goals(N) | Mean score |

| D504 | 2022/6/18 1:55:00 | 5.0 | 409.3 |

The detailed performance of the submitted model at each scene level is shown in the following tables. Navigation time is the mean time taken to activate the goals.

♣ Easy

| Participant team | Navigation time | Mean activated goals(N) | Mean remaining HP(H) | Mean damage(D) | Mean collision time(Tk) | Mean time(T) | Mean score |

| D504 | 13.2 | 5.0 | 530.0 | 60.0 | 4.0 | 52.5 | 462.2 |

♥ Moderate

| Participant team | Navigation time | Mean activated goals(N) | Mean remaining HP(H) | Mean damage(D) | Mean collision time(Tk) | Mean time(T) | Mean score |

| D504 | 18.5 | 5.0 | 590.0 | 40.0 | 6.4 | 54.3 | 432.3 |

♦ Hard

| Participant team | Navigation time | Mean activated goals(N) | Mean remaining HP(H) | Mean damage(D) | Mean collision time(Tk) | Mean time(T) | Mean score |

| D504 | 19.9 | 5.0 | 310.0 | 130.0 | 6.8 | 49.3 | 333.3 |

Final Winners

Track1

| Participant team | Ranking | Prize |

| D504 | First place | Grand prize |

| HKU_Herkules2 | Second place | First prize |

| Asterism | Third place | Second prize |

| SEU-AutoMan | Fourth place | Third prize |

| SEU-Abang | | Honorable prize |

| You are my god | | |

| THU_RLC_A | | |

Track2

| Participant team | Prize |

| D504 | Honorable prize |